In machine learning projects, it is common to produce many versions of the same model: simple hyperparameter changes, modifications to the structure or architecture of the model, or even different training datasets. The result can be a large number of runs that must be properly managed.

Proper organization of the different models, along with their metrics and configurations, is essential for evaluating which one offers the best performance and should be used, as well as for facilitating its management throughout the entire model lifecycle.

This type of management is part of the MLOps (Machine Learning Operations) paradigm, which brings together best practices for reliably deploying and maintaining machine learning models in production. It ensures a comprehensible history of all models, recording the hyperparameters used and the results of the validation tests.

This article explores GitLab’s integration functionality with MLflow to optimize the development and deployment process of machine learning models.

MLflow[1]

MLflow is an open-source platform designed to support teams and professionals working on machine learning projects. It focuses on the model lifecycle, ensuring traceability and reproducibility at every stage. MLflow is organized into four distinct components, which can be used individually or together:

- MLflow Tracking: An API that allows tracking parameters, code, and results from ML experiments. It also offers an interactive web interface to compare runs.

- MLflow Projects: A convention for organizing and describing code in a way that makes it reusable by teammates or automated tools.

- MLflow Models: A standard format for packaging machine learning models, facilitating their reuse and deployment across different environments.

- MLflow Model Registry: Acts as a model store, maintaining traceability of models along with their parameters.

GitLab Integration with MLflow

GitLab, a well-known platform offering powerful collaboration and version control tools, has recently added support for MLflow, so that GitLab itself can act as the backend for the MLflow client. As with code, models and their associated metadata can be published directly to the GitLab registry, and model results can be reviewed from GitLab’s web interface.

The integration is carried out using GitLab’s Model Registry[2] and Model Experiment Tracking[3] components, which are compatible with the MLflow Tracking API and can be used in code via the MLflow Client[4].
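As a minimal sketch of pointing the MLflow client at GitLab: the client reads its endpoint and credentials from the `MLFLOW_TRACKING_URI` and `MLFLOW_TRACKING_TOKEN` environment variables. The endpoint layout below follows the GitLab documentation at the time of writing and should be checked against your own instance. The host, project ID, and token are placeholders, and `gitlab_mlflow_env` is a hypothetical helper, not part of either product.

```python
import os


def gitlab_mlflow_env(gitlab_url: str, project_id: int, token: str) -> dict:
    """Build the environment variables the MLflow client reads.

    Assumption: GitLab exposes its MLflow-compatible API under
    <gitlab_url>/api/v4/projects/<project_id>/ml/mlflow.
    """
    base = gitlab_url.rstrip("/")
    return {
        "MLFLOW_TRACKING_URI": f"{base}/api/v4/projects/{project_id}/ml/mlflow",
        "MLFLOW_TRACKING_TOKEN": token,
    }


# Placeholder values: replace with your GitLab host, project ID, and access token.
env = gitlab_mlflow_env("https://gitlab.example.com/", 1234, "glpat-example-token")
os.environ.update(env)
```

Setting the variables before the training script imports and uses `mlflow` keeps the training code itself free of GitLab-specific details.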

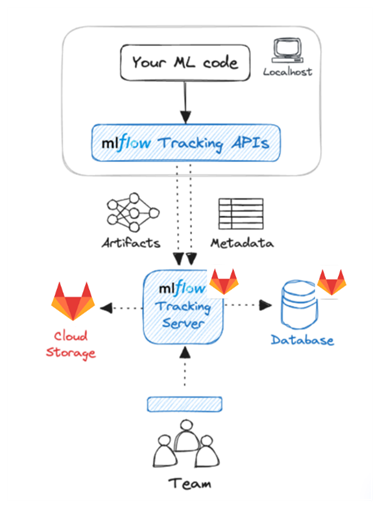

The diagram above illustrates how GitLab integrates with the MLflow Tracking component: code registers parameters, metrics, and artifacts (such as the model itself) via the MLflow API, and these are stored in GitLab, accessible and visible to the entire team. Experiment metrics and metadata are saved in GitLab’s database, while artifacts, being large files and objects, are stored in GitLab’s Package Registry.

Workflow Example

Below is an example of how the workflow functions when working with ML models using GitLab’s integration with MLflow. First, however, it is necessary to distinguish between GitLab’s Model Registry and Model Experiment Tracking components.

The Model Registry component serves as a repository for managing machine learning models throughout their lifecycle. It allows comparing model versions and monitoring their evolution over time.

The Model Experiment Tracking component allows for the tracking of machine learning experiments using the MLflow client. Experiments are associated with models stored in the Model Registry. When an MLflow run is created in code for an experiment, it can be linked to an existing registered model in the Model Registry, or a new one will be generated along with its associated experiment, named “[model]” followed by the new model’s name.

An experiment is a collection of different model runs that are comparable to each other, typically sharing the same set of parameters and evaluation metrics.

After running a new model, its artifacts (such as transformers, encoders, and the model itself) are stored in the Artifacts section of the experiment. Meanwhile, training metrics (e.g., precision, recall, accuracy) are recorded in the Performance section.
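The logging step described above might look roughly like the following sketch. It uses only the basic MLflow tracking calls (`set_experiment`, `start_run`, `log_param`, `log_metric`, `log_artifact`). The helper names, the model name, and the file names are hypothetical, and the tracker is passed in as an argument so that the function can be exercised without a GitLab instance; in real use the argument would be the `mlflow` module itself.

```python
from pathlib import Path
from typing import Any, Iterable, Mapping


def model_experiment_name(model_name: str) -> str:
    """Experiment name tied to a registered model: "[model]" plus the model name."""
    return f"[model]{model_name}"


def log_training_run(
    tracker: Any,
    model_name: str,
    params: Mapping[str, Any],
    metrics: Mapping[str, float],
    artifact_paths: Iterable[Path] = (),
) -> None:
    """Record one run through an MLflow-style tracker.

    `tracker` is expected to be the `mlflow` module, or any object exposing
    the same set_experiment/start_run/log_* functions.
    """
    tracker.set_experiment(model_experiment_name(model_name))
    with tracker.start_run():
        for key, value in params.items():
            tracker.log_param(key, value)      # hyperparameters
        for key, value in metrics.items():
            tracker.log_metric(key, value)     # shown with the run's metrics
        for path in artifact_paths:
            tracker.log_artifact(str(path))    # encoders, the model file, ...


# Typical call (requires the mlflow package and the environment variables
# for the GitLab endpoint to be set):
#
#   import mlflow
#   log_training_run(
#       mlflow,
#       "churn-classifier",                      # hypothetical model name
#       {"max_depth": 5, "n_estimators": 200},
#       {"accuracy": 0.93, "recall": 0.81},
#       [Path("encoder.pkl"), Path("model.pkl")],
#   )
```

Passing the tracker explicitly is a design choice for the sketch only; calling `mlflow.log_param` and the other functions directly in the training script works the same way.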

By using the GitLab integration with MLflow, you can track all models along with their corresponding experiments, parameters, metrics, and artifacts directly within GitLab, with full availability for the entire team at all times.

The Model Registry component can be used for deploying a model to production. Selecting the model version to deploy is still a manual process based on evaluating the obtained metrics. Once the user has chosen a successful run to use for inference, they should go to the specific experiment and click the Promote button, which stores that run as a new version of the model in the Model Registry. The version of the model used for inference will be the latest available in the Model Registry.
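Because the choice of run is manual, a small script can help shortlist a candidate before promoting it in the web interface. The sketch below is an illustration only: it assumes the run summaries have already been gathered into plain dictionaries (for instance, copied from the experiment page), and `best_run`, the run IDs, and the metric values are all hypothetical.

```python
from typing import Any, Mapping, Sequence


def best_run(
    runs: Sequence[Mapping[str, Any]],
    metric: str,
    higher_is_better: bool = True,
) -> Mapping[str, Any]:
    """Return the run with the best value for `metric`.

    Runs that do not report the metric are ignored.
    """
    scored = [run for run in runs if metric in run["metrics"]]
    if not scored:
        raise ValueError(f"no run reports metric {metric!r}")
    pick = max if higher_is_better else min
    return pick(scored, key=lambda run: run["metrics"][metric])


# Hypothetical run summaries for one experiment.
runs = [
    {"run_id": "a1", "metrics": {"accuracy": 0.91, "recall": 0.80}},
    {"run_id": "b2", "metrics": {"accuracy": 0.94, "recall": 0.78}},
    {"run_id": "c3", "metrics": {"recall": 0.85}},
]

candidate = best_run(runs, "accuracy")
print(candidate["run_id"])  # b2
```

A single metric rarely tells the whole story, so the output is best treated as a starting point for the manual review rather than as the decision itself.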

Although having models in the repository is extremely useful, downloading the model from the registry each time inference is required is inefficient. A better strategy is to maintain a local copy of the model and update it periodically or whenever a new version is created.
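One way to sketch this caching strategy is to store the cached version number next to the model files and download again only when the registry reports a different version. Everything named here is an assumption for illustration: the `VERSION` marker file, the `refresh_local_model` helper, and the `download` callback, which stands in for whatever mechanism actually fetches the model from GitLab.

```python
from pathlib import Path
from typing import Callable

VERSION_FILE = "VERSION"  # hypothetical marker file holding the cached version


def refresh_local_model(
    cache_dir: Path,
    latest_version: str,
    download: Callable[[str, Path], None],
) -> bool:
    """Download `latest_version` into `cache_dir` unless it is already cached.

    Returns True when a download happened, False when the cache was current.
    """
    cache_dir.mkdir(parents=True, exist_ok=True)
    marker = cache_dir / VERSION_FILE
    cached = marker.read_text().strip() if marker.exists() else None
    if cached == latest_version:
        return False
    download(latest_version, cache_dir)   # caller-supplied fetch logic
    marker.write_text(latest_version)     # written only after a successful download
    return True
```

The function can be called on a schedule or from a pipeline job triggered when a new version is promoted; in both cases inference code reads the model from `cache_dir` and never contacts the registry directly.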

It is worth noting that the MLflow integration with GitLab is still under development, and some features are yet to be implemented. Therefore, future improvements in the model registration and promotion process for deployment are expected. However, this integration already provides highly useful functionality to keep a detailed, historical, and team-accessible record of all models and experiments within a project.

[2] https://docs.gitlab.com/user/project/ml/model_registry/

[3] https://docs.gitlab.com/user/project/ml/experiment_tracking/

[4] https://docs.gitlab.com/ee/user/project/ml/experiment_tracking/